Embarking on the Exploration: Fundamentals of Binary Exploitation on Linux


January 27, 2023

Introduction

Embarking on a journey to unravel the intricacies of binary exploitation techniques, I’m excited to share my experiences in this series. While it’s admittedly one of the trickier topics to tackle, especially for beginners, I’ve decided to take the plunge in 2023! 😅 My guide of choice is the remarkable Nightmare course, supplemented by additional resources listed below. So, let’s dive into the fascinating world of binary exploitation!

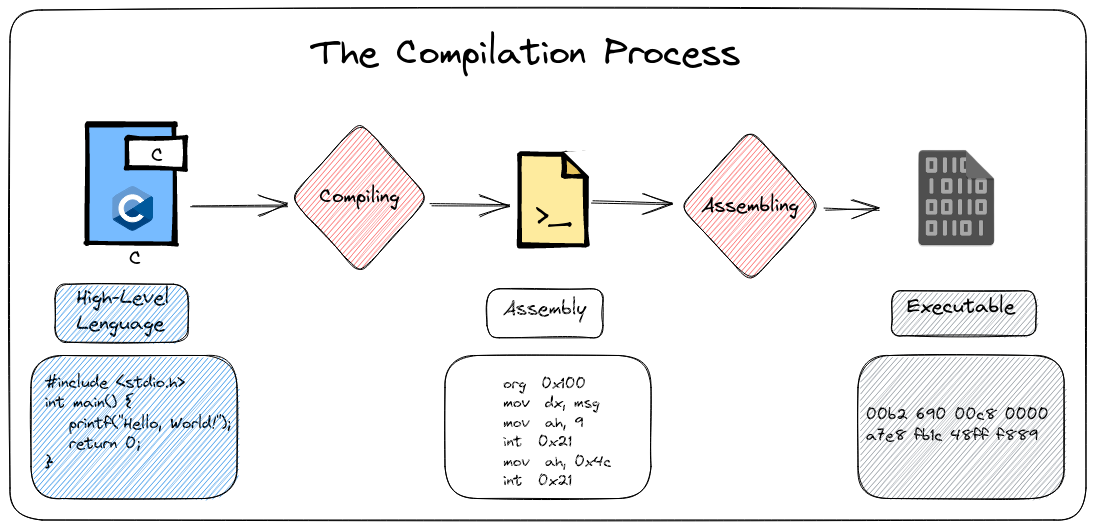

The compilation process

The compilation process is the bridge that translates code written in a high-level language such as C into the machine’s language: binary code. This binary code is a sequence of instructions, or operation codes (opcodes). The instructions are stored in memory, and the processor executes them.

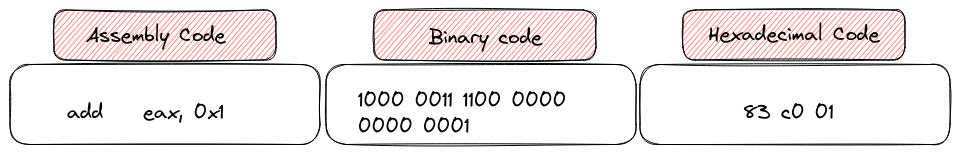

Different ways of saying the same thing

To make these instructions easier to read, we usually write them in hexadecimal rather than binary. Each hexadecimal digit stands for exactly four bits, so every byte becomes exactly two hex digits. For example, 11000000 in binary is C0 in hex. This isn’t just a technical choice; it’s a practical one. Developers and analysts find the instructions much easier to work with during debugging and analysis.
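To see that binary and hexadecimal really are two spellings of the same bytes, here is a small sketch that prints each byte both ways. The byte values 83 C0 01 are the encoding of “add eax, 0x1”, which appears later in this article. The function names `byte_to_binary` and `print_binary_and_hex` are made up for illustration.

```c
#include <stddef.h>
#include <stdint.h>
#include <stdio.h>

/* Fill `out` with the 8-character binary form of `byte`, plus a NUL. */
void byte_to_binary(uint8_t byte, char out[9]) {
    for (int bit = 7; bit >= 0; bit--) {
        /* The most significant bit is written first, at out[0]. */
        out[7 - bit] = ((byte >> bit) & 1u) ? '1' : '0';
    }
    out[8] = '\0';
}

/* Print each byte of an instruction in binary and in hexadecimal. */
void print_binary_and_hex(const uint8_t *bytes, size_t len) {
    char bin[9];
    for (size_t i = 0; i < len; i++) {
        byte_to_binary(bytes[i], bin);
        printf("%s = %02X\n", bin, (unsigned)bytes[i]);
    }
}
```

Calling `print_binary_and_hex` on the array `{0x83, 0xC0, 0x01}` prints `10000011 = 83`, `11000000 = C0` and `00000001 = 01`. Each pair of hex digits maps directly onto one group of eight bits.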

Memory content

Now, let’s talk about the fascinating evolution of code during this compilation journey. The high-level code transforms into something called assembly code. Think of assembly code as the machine’s language, but presented in a way that we humans can grasp. It’s like a bridge between our human-friendly code and the machine’s binary instructions.

This brings us to the next step: assembling. It’s the magical process where assembly code gets translated into opcodes, the fundamental building blocks of the executable program. This is where the transformation from readable instructions to the machine-understandable language truly takes shape.

The compilation process

Different architectures use different assembly languages. In my exploration, I’ll focus on 64-bit (x64) and 32-bit x86 (which I’ll call x32 throughout this series) programs in the ELF (Executable and Linkable Format) file format. Adding to the richness of this journey, there are two main assembly syntaxes in use: Intel and AT&T.

Let’s take a moment to appreciate the difference between these syntaxes. In Intel syntax, the destination operand comes first and the source comes second. AT&T syntax reverses that order. For example, copying ebx into eax is written “mov eax, ebx” in Intel syntax and “mov %ebx, %eax” in AT&T syntax, which also prefixes register names with %. It’s a subtle yet crucial distinction: reading an instruction in the wrong syntax swaps its source and destination. This diversity adds a layer of complexity to understanding these architectures, making the exploration all the more intriguing!

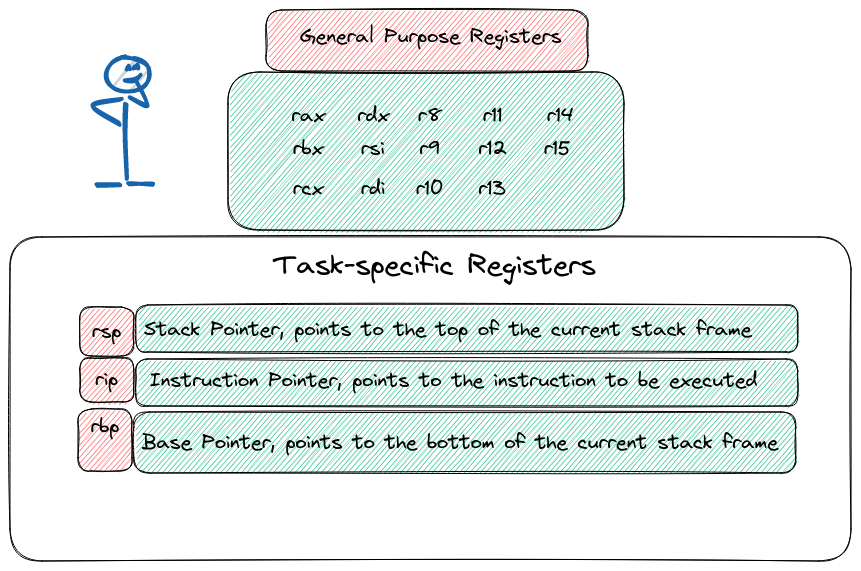

Registers

Registers are small, fast storage locations inside the processor. They hold the values that instructions work on, whether those values are memory addresses or data; picture them as the local variables of the processor. Some registers serve specific functions, while others are general purpose. The upcoming sections take a closer look at these registers and their roles as we progress through the article.

Registers summary

On x64 Linux, which follows the System V AMD64 calling convention, the first six integer or pointer arguments of a function are passed in registers. Each register has its designated purpose in this symphony:

- rdi: First Argument

- rsi: Second Argument

- rdx: Third Argument

- rcx: Fourth Argument

- r8: Fifth Argument

- r9: Sixth Argument

The scenario shifts when we move to x32. There, the stack becomes the messenger: in the common cdecl convention, the caller pushes the arguments onto the stack, right to left, before the call. On x64 the stack is also used, but only for arguments beyond the sixth. When a C function returns a value (functions declared void return nothing), that value is placed in rax on x64 and in eax on x32. It’s a dance of registers and stacks, orchestrating the flow of information and results in function calls.
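As a concrete sketch, here is a hypothetical C function with seven `long` arguments. The comments show where the System V AMD64 convention places each argument when the function is actually called (that is, not inlined). The seventh argument does not fit in a register, so it goes on the stack. Compiled for 32-bit x86 with cdecl, all seven arguments would be pushed onto the stack instead, and the result would come back in eax.

```c
/* Hypothetical example: argument locations under System V AMD64 (x64 Linux). */
long sum7(long a,   /* rdi */
          long b,   /* rsi */
          long c,   /* rdx */
          long d,   /* rcx */
          long e,   /* r8  */
          long f,   /* r9  */
          long g)   /* 7th argument: passed on the stack */
{
    return a + b + c + d + e + f + g;   /* the result is returned in rax */
}
```

Disassembling a call such as `sum7(1, 2, 3, 4, 5, 6, 7)` in a debugger is a good way to check these locations on your own machine.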

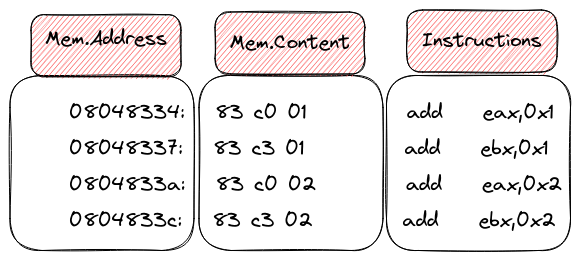

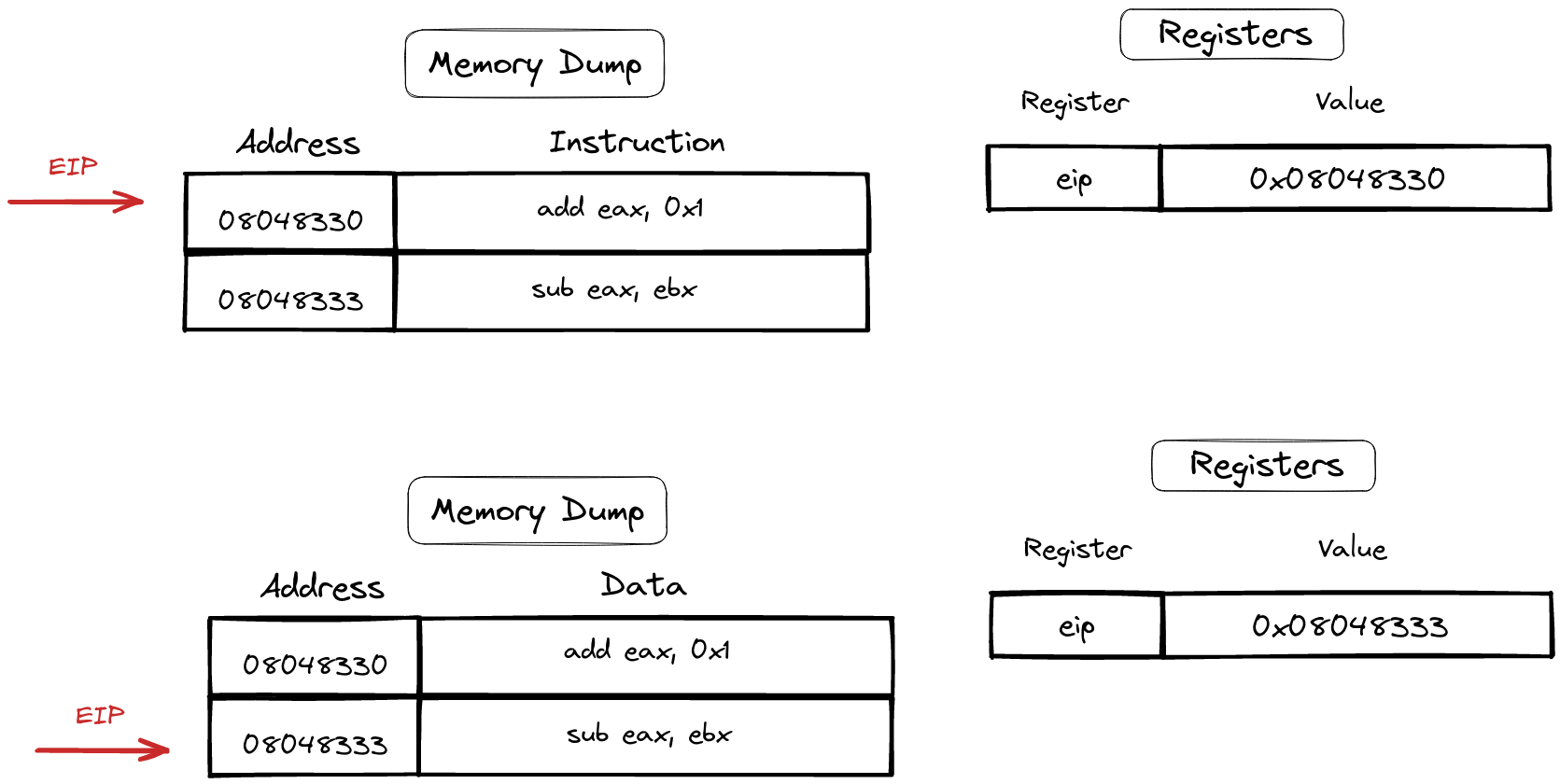

Instruction Pointer (rip, eip)

Meet the Instruction Pointer, the register that holds the address of the next instruction the processor will execute. Each time an instruction runs, this register advances by the size of that instruction, unless the instruction itself changes the flow of execution, as jumps and calls do.

Let’s break it down with an example. The instruction “add eax, 0x1” is stored in memory as the bytes “83 C0 01”, which occupy 3 bytes. After it executes, the Instruction Pointer moves forward by 3 and lands on the next instruction in line.
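The sketch below models this stepping for a short, hypothetical 32-bit program that contains no jumps or calls. The start address 0x08048000 and the instruction list are assumptions for illustration. The byte encodings in the comment are the ones the GNU assembler produces for these instructions, but other valid encodings exist.

```c
#include <stddef.h>
#include <stdint.h>

struct insn {
    const char *text;   /* Intel-syntax mnemonic, for readability only */
    uint32_t length;    /* encoded size in bytes */
};

/* Hypothetical straight-line program (no jumps or calls):
     add eax, 0x1   ->  83 C0 01   (3 bytes)
     mov eax, ebx   ->  89 D8      (2 bytes)
     push eax       ->  50         (1 byte)                   */
static const struct insn program[] = {
    {"add eax, 0x1", 3},
    {"mov eax, ebx", 2},
    {"push eax",     1},
};

#define PROGRAM_LEN (sizeof program / sizeof program[0])

/* Return eip after executing the first `count` instructions starting at
   `start`. Each instruction simply advances eip by its own length. */
uint32_t eip_after(uint32_t start, size_t count) {
    uint32_t eip = start;
    if (count > PROGRAM_LEN)
        count = PROGRAM_LEN;            /* never run past the last instruction */
    for (size_t i = 0; i < count; i++)
        eip += program[i].length;
    return eip;
}
```

With a start of 0x08048000, `eip_after(start, 1)` is 0x08048003, just past the 3-byte add. `eip_after(start, 3)` is 0x08048006.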

Size of registers

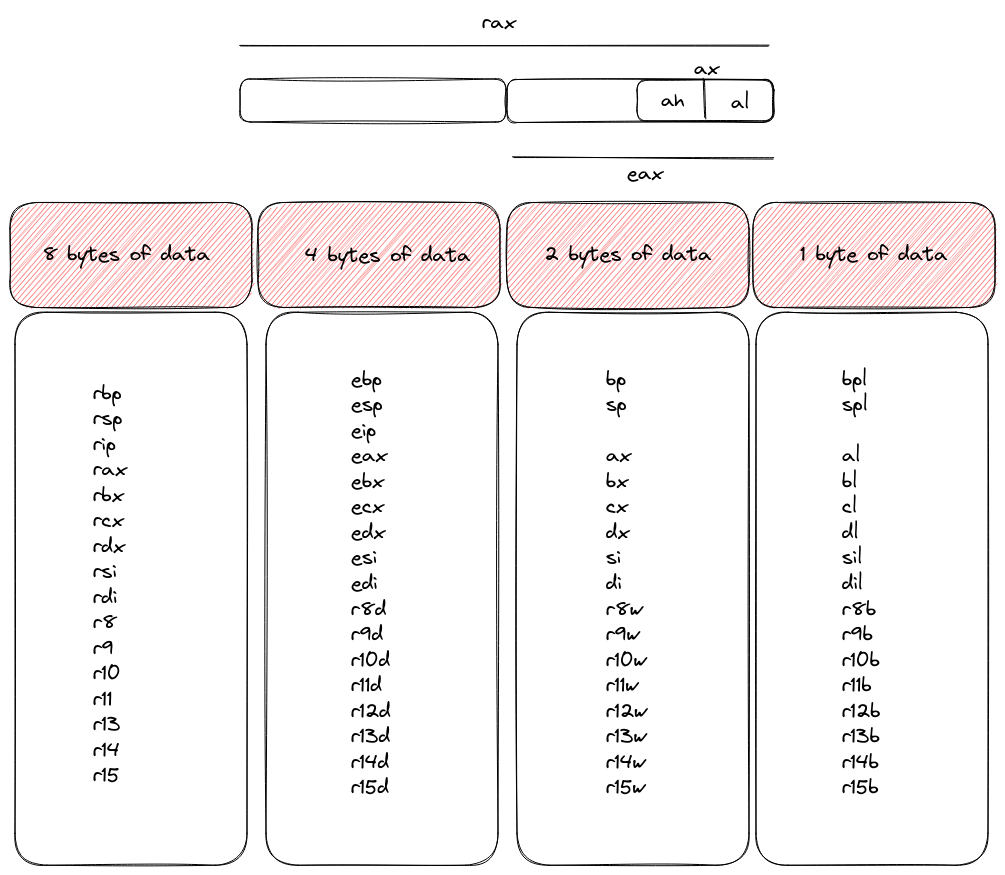

A notable difference between x32 and x64 systems is the size of their general-purpose registers. Picture registers as the processor’s storage units, holding the values it computes with.

On x32, these registers are 4 bytes (32 bits) wide: eax, ebx, ecx and so on. On x64 they double to 8 bytes (64 bits) and get new names such as rax and rbx. The old 32-bit names still refer to their lower halves, so eax is the lower 4 bytes of rax. Wider registers let the processor handle larger values and hold much larger memory addresses in a single register.
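This small sketch shows how the familiar names map onto parts of a 64-bit rax value: eax is the low 32 bits, ax the low 16, and ah and al the two bytes of ax. It only models *reading* these views. Writing to a sub-register has its own rules on real hardware, which this sketch does not cover. The function names are hypothetical.

```c
#include <stdint.h>

/* Read-only views of a 64-bit rax value under the older register names. */
uint32_t eax_of(uint64_t rax) { return (uint32_t)(rax & 0xFFFFFFFFu); }      /* low 32 bits */
uint16_t ax_of(uint64_t rax)  { return (uint16_t)(rax & 0xFFFFu); }          /* low 16 bits */
uint8_t  ah_of(uint64_t rax)  { return (uint8_t)((rax >> 8) & 0xFFu); }      /* bits 8..15 */
uint8_t  al_of(uint64_t rax)  { return (uint8_t)(rax & 0xFFu); }             /* bits 0..7 */
```

For rax = 0x1122334455667788, these give eax = 0x55667788, ax = 0x7788, ah = 0x77 and al = 0x88.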

Registers Size

As we navigate through this exploration, the terms “word,” “dword,” and “qword” will make frequent appearances, each carrying its own significance in the language of bytes.

In our lexicon, a “word” encapsulates 2 bytes, forming a compact unit of data. Stepping up in size, a “dword” extends to 4 bytes, providing a more substantial chunk of information. And finally, at the zenith of this byte hierarchy, a “qword” commands a generous 8 bytes, offering an expansive canvas for data storage.

These terms serve as our linguistic tools, allowing us to articulate and navigate the intricate tapestry of data in the digital realm. So, as we encounter “words,” “dwords,” and “qwords,” let’s appreciate the nuanced palette they bring to our understanding of data sizes.
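In C, the fixed-width integer types from `<stdint.h>` line up with these size names. The typedef names below are made up for this sketch. The static assertions make the build fail if a size is not what the comment claims.

```c
#include <stdint.h>

/* Hypothetical typedefs naming C's fixed-width types after the assembly size terms. */
typedef uint16_t word_t;    /* word:  2 bytes (16 bits) */
typedef uint32_t dword_t;   /* dword: 4 bytes (32 bits) */
typedef uint64_t qword_t;   /* qword: 8 bytes (64 bits) */

/* Compile-time checks (C11). */
_Static_assert(sizeof(word_t)  == 2, "a word is 2 bytes");
_Static_assert(sizeof(dword_t) == 4, "a dword is 4 bytes");
_Static_assert(sizeof(qword_t) == 8, "a qword is 8 bytes");

/* Largest unsigned value each size can hold. */
const word_t  word_max  = UINT16_MAX;   /* 0xFFFF */
const dword_t dword_max = UINT32_MAX;   /* 0xFFFFFFFF */
const qword_t qword_max = UINT64_MAX;   /* 0xFFFFFFFFFFFFFFFF */
```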

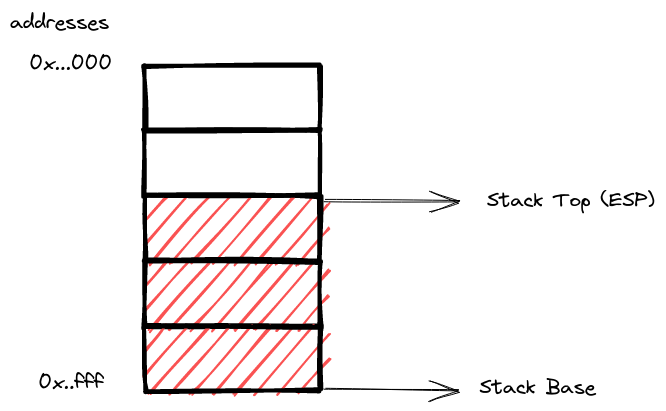

The stack

Now, let’s shine a spotlight on the stack—a dynamic region in the memory landscape that plays a pivotal role in every process. Think of the stack as a backstage crew, orchestrating data management with a Last In, First Out (LIFO) approach.

To interact with this backstage maestro, we employ two fundamental instructions: “push” and “pop.” “Push” gracefully adds elements to the stack, creating a neatly organized stack of data. Conversely, “pop” takes a bow as it elegantly removes elements, revealing the most recently added data.

Why does the stack take center stage? It serves as a temporary haven for data, housing everything from local variables and function parameters to return addresses. This intricate dance of push and pop ensures a seamless flow of information, a choreography vital to the performance of each process. It’s the backstage magic that keeps the show running smoothly.

Stack Pointer (rsp, esp)

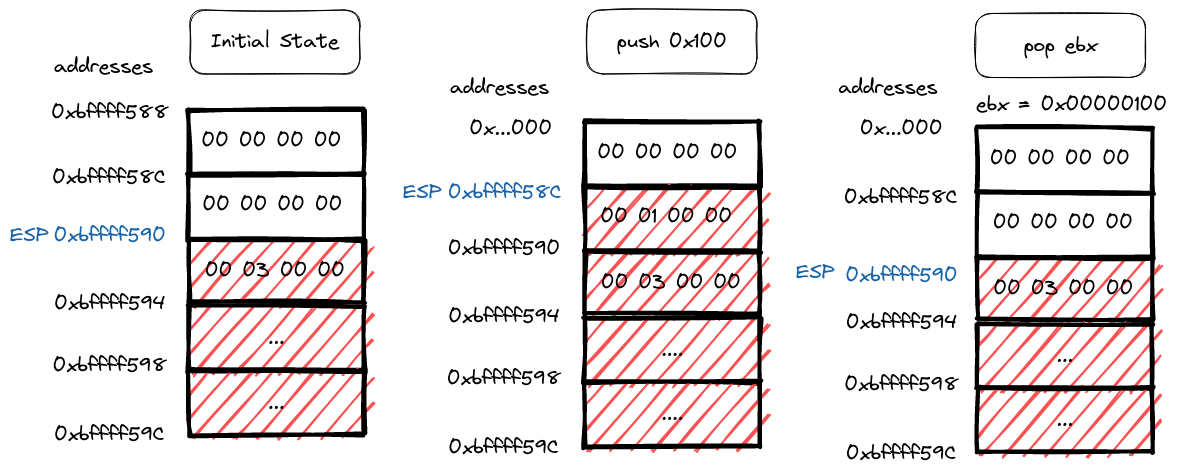

Now, let’s unveil the maestro behind the scenes—the Stack Pointer (rsp, esp). This register stands as the guardian of the stack, keeping track of the memory address at the very summit, where the last element resides.

Picture this: on x86, the stack grows toward lower memory addresses. When a new value enters through a “push” instruction, the Stack Pointer is first decreased by the size of the value (4 bytes on x32, 8 bytes on x64). The value is then written at that new address, so the Stack Pointer points at the newly welcomed guest. When the time comes for a “pop”, the value at the top of the stack is copied into the destination register, and the Stack Pointer is increased by the same amount. The popped value is not erased. It stays in memory below the new top of the stack until something overwrites it.

In 32-bit architecture, this dance is captured in the visual below, which shows how each instruction changes the Stack Pointer and the stack contents.
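The following toy model of a 32-bit stack follows the push and pop rules just described. A small byte array stands in for stack memory, and `esp` is an offset into it rather than a real address. The struct and function names are hypothetical, and there are no overflow or underflow checks. `memcpy` stores values in the host’s byte order, which on an x86 machine is little-endian, the same as a real stack.

```c
#include <stddef.h>
#include <stdint.h>
#include <string.h>

#define STACK_BYTES 64

/* Toy 32-bit stack: grows toward lower offsets, like the real x86 stack. */
struct toy_stack {
    uint8_t mem[STACK_BYTES];
    uint32_t esp;                     /* offset of the current top of the stack */
};

void stack_init(struct toy_stack *s) {
    memset(s->mem, 0, sizeof s->mem);
    s->esp = STACK_BYTES;             /* empty: esp sits just past the highest byte */
}

/* push: decrement esp by 4, then store the dword at [esp]. */
void push32(struct toy_stack *s, uint32_t value) {
    s->esp -= 4;
    memcpy(&s->mem[s->esp], &value, sizeof value);
}

/* pop: load the dword at [esp], then increment esp by 4.
   The bytes are left in place, just as on real hardware. */
uint32_t pop32(struct toy_stack *s) {
    uint32_t value;
    memcpy(&value, &s->mem[s->esp], sizeof value);
    s->esp += 4;
    return value;
}
```

Pushing 0x100 and then 0x200 leaves esp at 56. Popping returns 0x200 first and then 0x100 (last in, first out), and esp ends back at 64.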

Push and Pop instructions

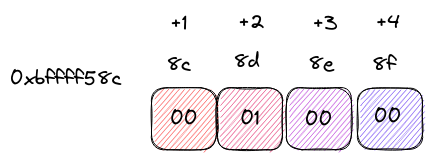

Continuing with push, let’s look at why “push 0x100” shows up in memory as “00 01 00 00”. The answer lies in the byte order the processor uses to store multi-byte values, known as little-endian.

Picture it like a carefully choreographed routine—little-endian style. The smallest memory address eagerly embraces the least significant byte, leading the procession up to the grand finale with the most significant byte taking its place.

This ordering belongs to the x86 architecture, not to the program. A big-endian processor would store the same 4-byte value the other way around, as “00 00 01 00”. When we read memory dumps on x86, we therefore have to reverse the bytes mentally to recover the value.
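To make the difference concrete, here is a sketch that writes a 32-bit value into 4 bytes in both orders using shifts. Because it does not depend on the host machine’s byte order, it gives the same result everywhere. The function names are hypothetical.

```c
#include <stdint.h>

/* Little-endian (x86 order): least significant byte at the lowest address. */
void store_le32(uint32_t v, uint8_t out[4]) {
    for (int i = 0; i < 4; i++)
        out[i] = (uint8_t)(v >> (8 * i));
}

/* Big-endian: most significant byte at the lowest address. */
void store_be32(uint32_t v, uint8_t out[4]) {
    for (int i = 0; i < 4; i++)
        out[i] = (uint8_t)(v >> (8 * (3 - i)));
}
```

For 0x100, `store_le32` produces 00 01 00 00, matching what “push 0x100” leaves on the stack. `store_be32` produces 00 00 01 00.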

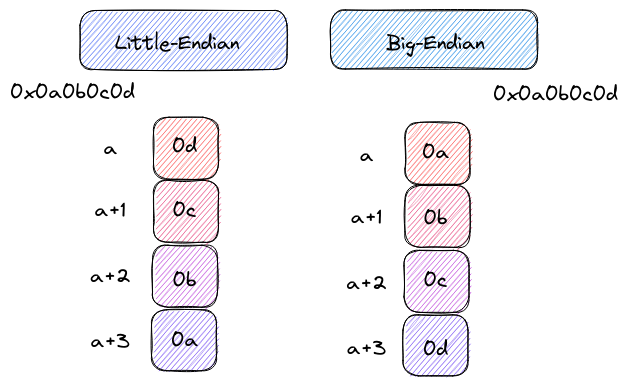

Little-endian vs Big-endian

Now, let’s shed light on how memory addresses gracefully guide us to the various cells adorned with data. Imagine this as a visual voyage, and to make it more accessible, let’s turn to a simple yet illuminating diagram:

memory address to data relation

In this diagram, each memory address works like a map coordinate that points to one specific cell where data is stored. On x86, memory is byte-addressable: every address identifies exactly one byte. A multi-byte value such as a dword occupies consecutive addresses, starting at its own address.

So, as we traverse the digital landscape, let this diagram be our guide to how memory addresses relate to the data they hold.

Instructions

Let’s step into the realm of instructions—a symphony of commands that govern the dance of binary code. While we’ve touched on the graceful movements of “push” and “pop,” there’s a whole ensemble of instructions awaiting our exploration when analyzing assembly code.

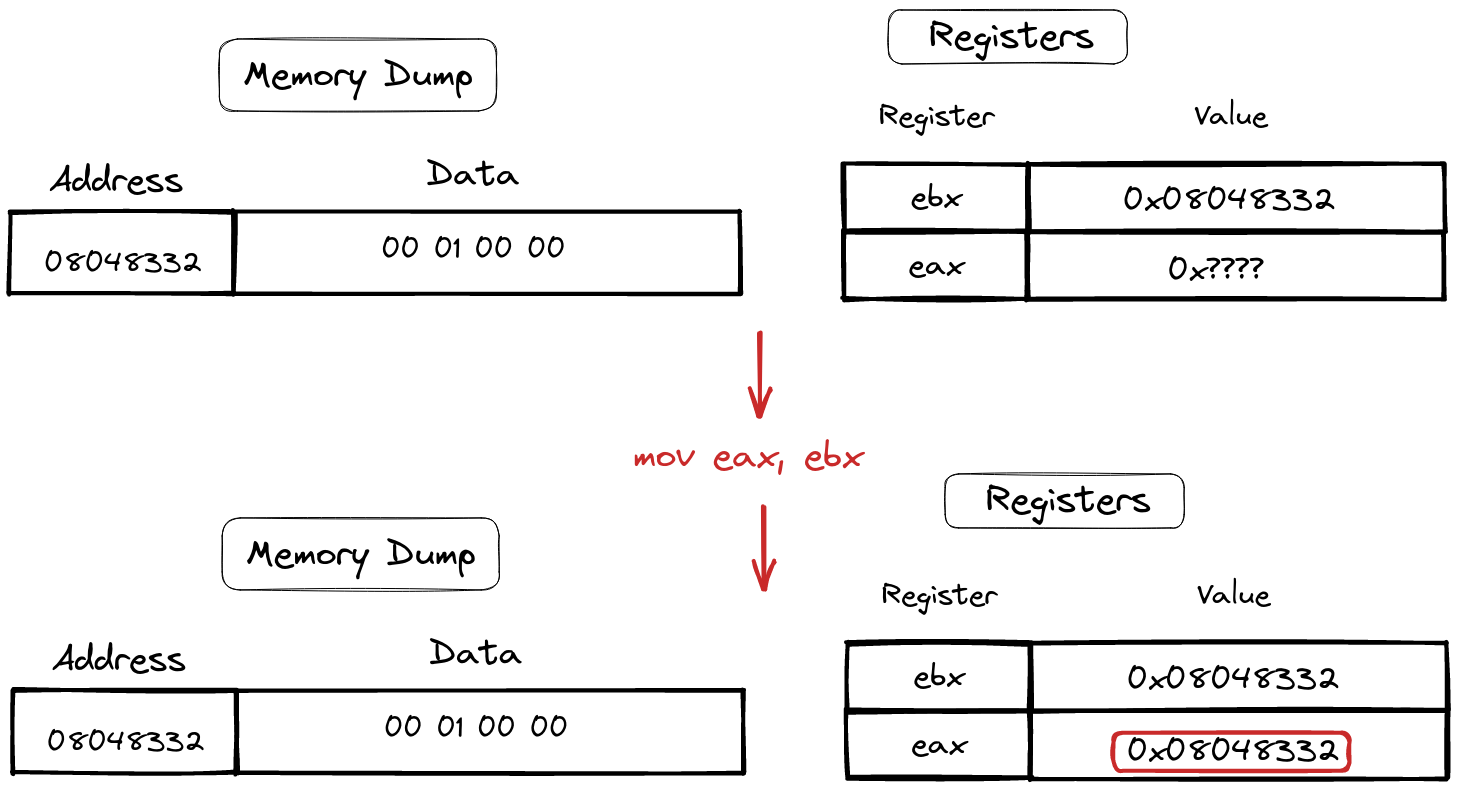

Mov instruction

Meet the maestro, the “mov” instruction, which copies data from one place to another. In its simplest form, it copies the value in the ebx register into the eax register. Despite the name, nothing is actually moved: ebx keeps its value.

mov eax, ebx

Sample of mov instruction

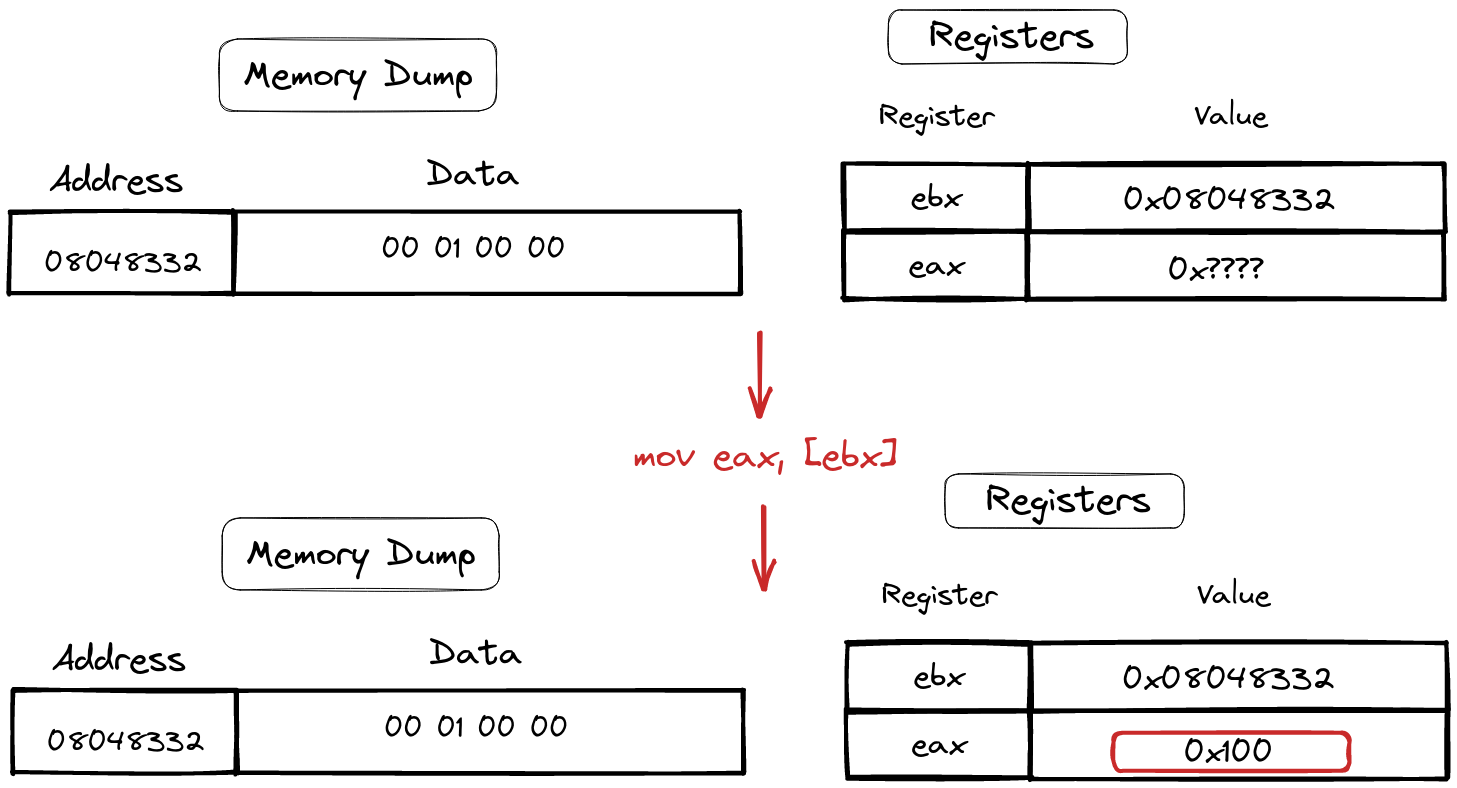

Yet, “mov” possesses a more nuanced choreography. It can also perform a pas de deux with data by employing what we call “dereference.” This term unveils a dance with memory addresses, guided by pointers. Imagine a pointer as a curator, pointing to data in the vast museum of memory. The syntax for this intricate dance is demonstrated below:

mov eax, [ebx]

mov [ebx], eax

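The square brackets mean “the memory at this address”. The first line reads the value stored at the address held in ebx and copies it into eax, much like x = *p in C. The second line writes the value of eax to the memory at that address, like *p = x. Array access in high-level languages, such as array[3], works the same way, with an offset added to the array’s base address. It’s a blend of elegance and functionality that shows how data moves between registers and memory.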
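Here are the C counterparts of these dereferencing forms, as a sketch. The pointer `p` plays the role of ebx, and the returned or stored `uint32_t` plays the role of eax. The function names are hypothetical. A compiler may or may not emit exactly these instructions for them.

```c
#include <stdint.h>

/* mov eax, [ebx] : read the dword stored at the address held in ebx. */
uint32_t load_like_mov(const uint32_t *p) {
    return *p;
}

/* mov [ebx], eax : write eax to the memory at the address held in ebx. */
void store_like_mov(uint32_t *p, uint32_t value) {
    *p = value;
}

/* array[3] : the same idea, with the address computed as
   base + 3 * sizeof(uint32_t), i.e. *(array + 3). */
uint32_t load_index3(const uint32_t *array) {
    return array[3];
}
```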

Sample of dereference

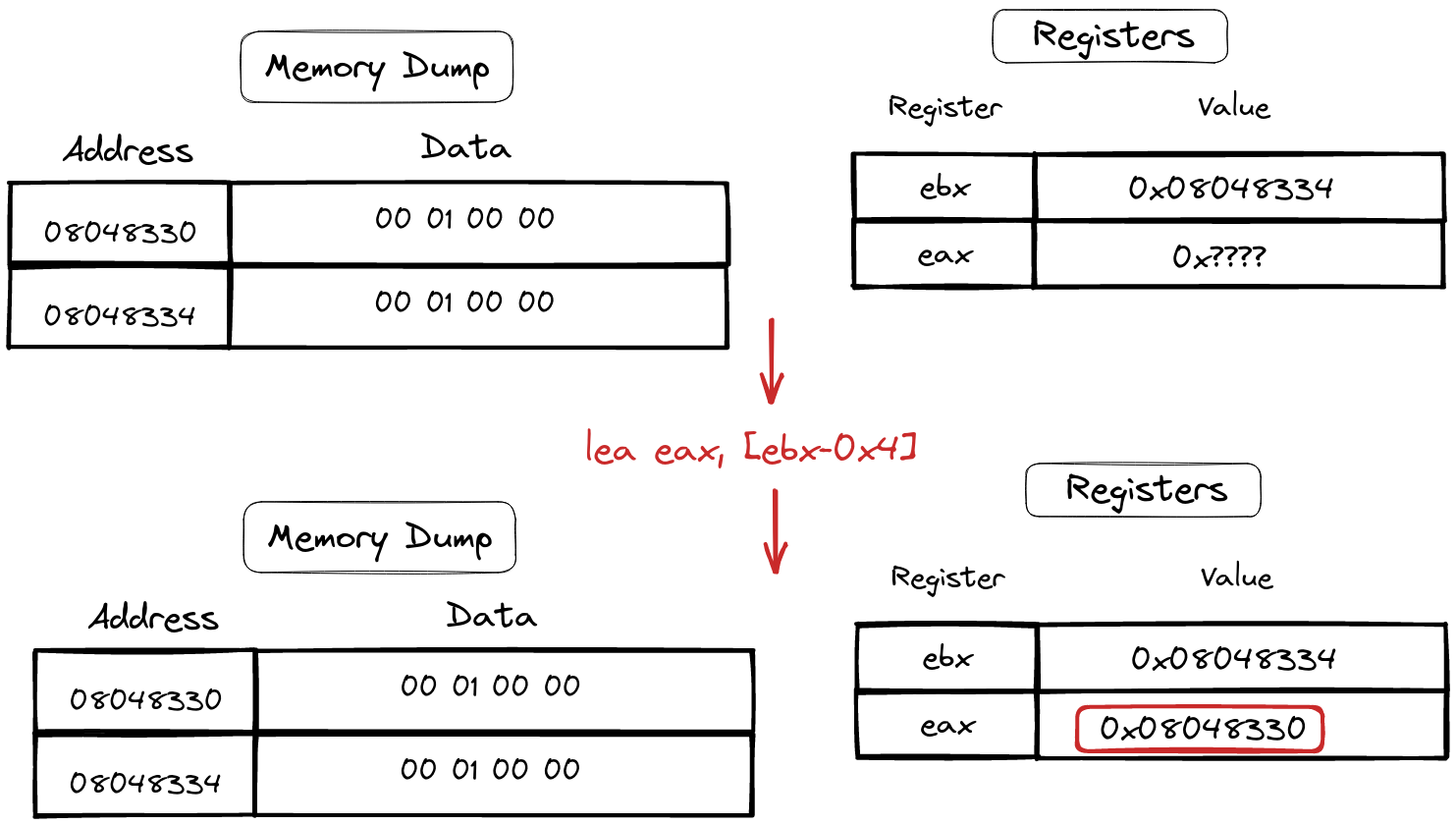

Lea instruction

Introducing the “lea” (load effective address) instruction, a maestro that computes memory addresses. Its syntax looks like a well-rehearsed routine:

lea eax,[ebx-0x4]

Here, “lea” takes the address held in the source register (ebx), applies the offset (-0x4), and places the resulting address in the destination register (eax). Although it uses the same bracket syntax as a dereference, lea never reads memory. It only performs the address arithmetic.

For those familiar with C, think of “lea” as the “&” (address-of) operator. Like taking the address of a variable, it gives you where the data lives rather than the data itself.
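This sketch contrasts “lea eax, [ebx-0x4]” with “mov eax, [ebx-0x4]”. It assumes that ebx holds the address of a 4-byte `int32_t` inside an array, so that subtracting 4 lands on the previous element. The function names are hypothetical, and the integer/pointer round trip through `uintptr_t` assumes an ordinary flat address space, as on x86 Linux.

```c
#include <stdint.h>

/* lea eax, [ebx-0x4] : compute the address ebx - 4; no memory is read. */
uintptr_t lea_minus4(uintptr_t ebx) {
    return ebx - 0x4;
}

/* mov eax, [ebx-0x4] : same address, but this time load the value stored there. */
int32_t mov_minus4(uintptr_t ebx) {
    return *(const int32_t *)(ebx - 0x4);
}
```

With `int32_t arr[3] = {10, 20, 30}` and ebx set to the address of `arr[2]`, `lea_minus4` returns the address of `arr[1]` (what `&arr[1]` gives in C). `mov_minus4` returns the value stored there, 20.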

Sample of lea instruction

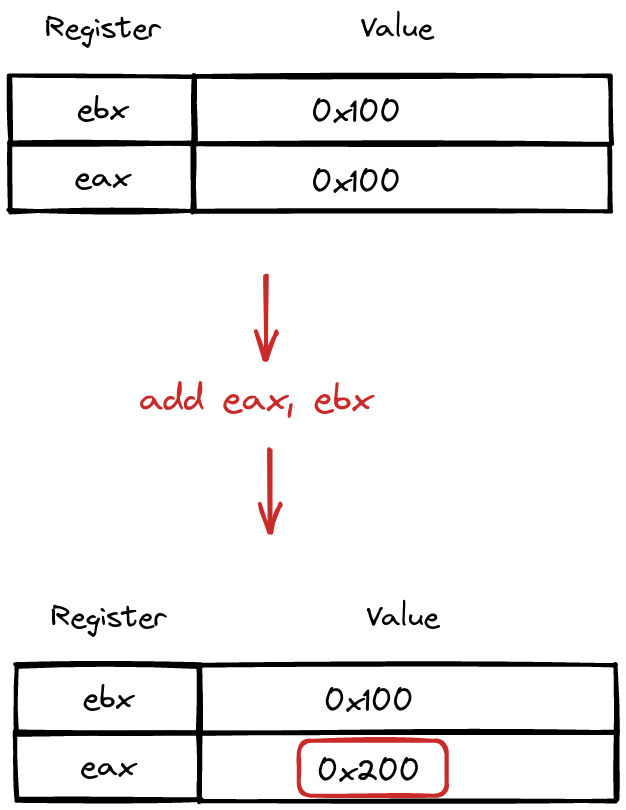

Add instruction

Enter the “add” instruction—a virtuoso in the arithmetic ballet of assembly code. With a simple yet impactful syntax:

add rax, rdx

In this elegant move, “add” takes the stored values from the rax and rdx registers, orchestrates a seamless addition, and then gracefully deposits the sum into the target register, here elegantly named rax.

It’s a choreography of numbers, a ballet of bits, where the addition of registers becomes a harmonious performance, enriching the computational tapestry of the processor.
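A minimal model of “add rax, rdx” in C might look like the sketch below. A 64-bit register keeps only the low 64 bits of the sum, which is exactly how unsigned C arithmetic behaves. As an extra, the sketch reports whether the sum wrapped around, which is the condition under which the real instruction sets the carry flag. The function name and the `cf` out-parameter are hypothetical.

```c
#include <stdint.h>

/* add rax, rdx : rax = rax + rdx, keeping only the low 64 bits.
   *cf is set to 1 if the unsigned sum wrapped around (the carry case). */
uint64_t add_rax_rdx(uint64_t rax, uint64_t rdx, int *cf) {
    uint64_t result = rax + rdx;   /* unsigned arithmetic wraps modulo 2^64 */
    *cf = (result < rax);          /* wrapped iff the result is smaller than an operand */
    return result;                 /* on hardware, this overwrites rax */
}
```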

Sample of add instruction

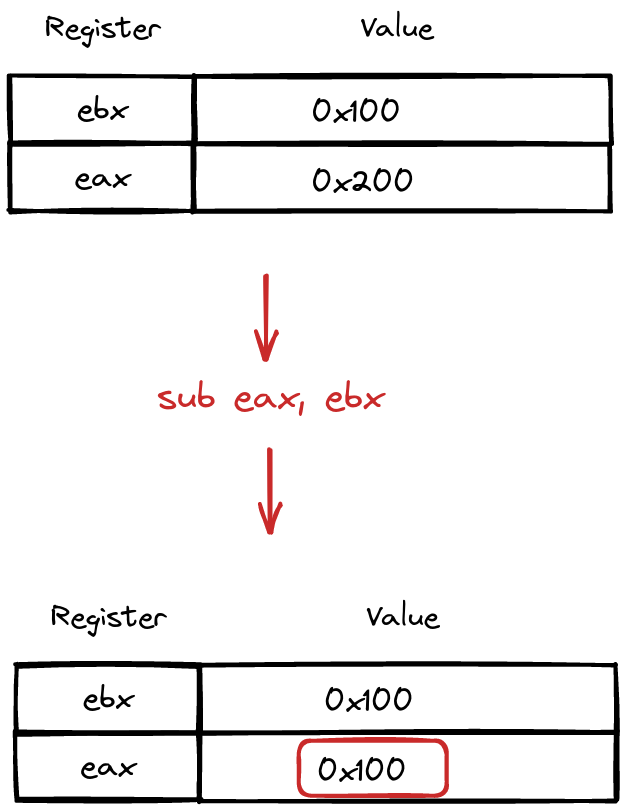

Sub instruction

Now, let’s welcome the “sub” instruction to the stage—a luminary in the arithmetic theater of assembly code. Witness its graceful syntax:

sub eax, ebx

In this intricate move, “sub” takes center stage by subtracting the value stored in the ebx register from that in the eax register. The result of this subtraction, a dazzling numerical difference, is then delicately deposited into the target register, here embodied as eax.

It’s a performance of numerical finesse, a ballet of subtraction where registers gracefully interact, leaving behind a result that resonates in the computational symphony.
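Here is the same idea for “sub eax, ebx”, sketched in C with 32-bit unsigned arithmetic. When ebx is larger than eax, the result wraps around. For example, 1 - 2 gives 0xFFFFFFFF, which is the two’s-complement bit pattern for -1. The function name is hypothetical.

```c
#include <stdint.h>

/* sub eax, ebx : eax = eax - ebx, kept to 32 bits like the register. */
uint32_t sub_eax_ebx(uint32_t eax, uint32_t ebx) {
    return eax - ebx;   /* unsigned arithmetic wraps modulo 2^32 */
}
```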

Sample of sub instruction

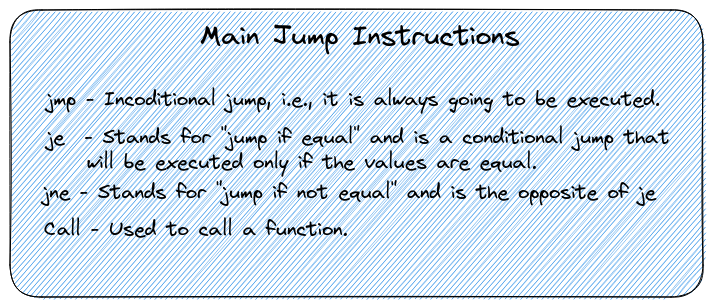

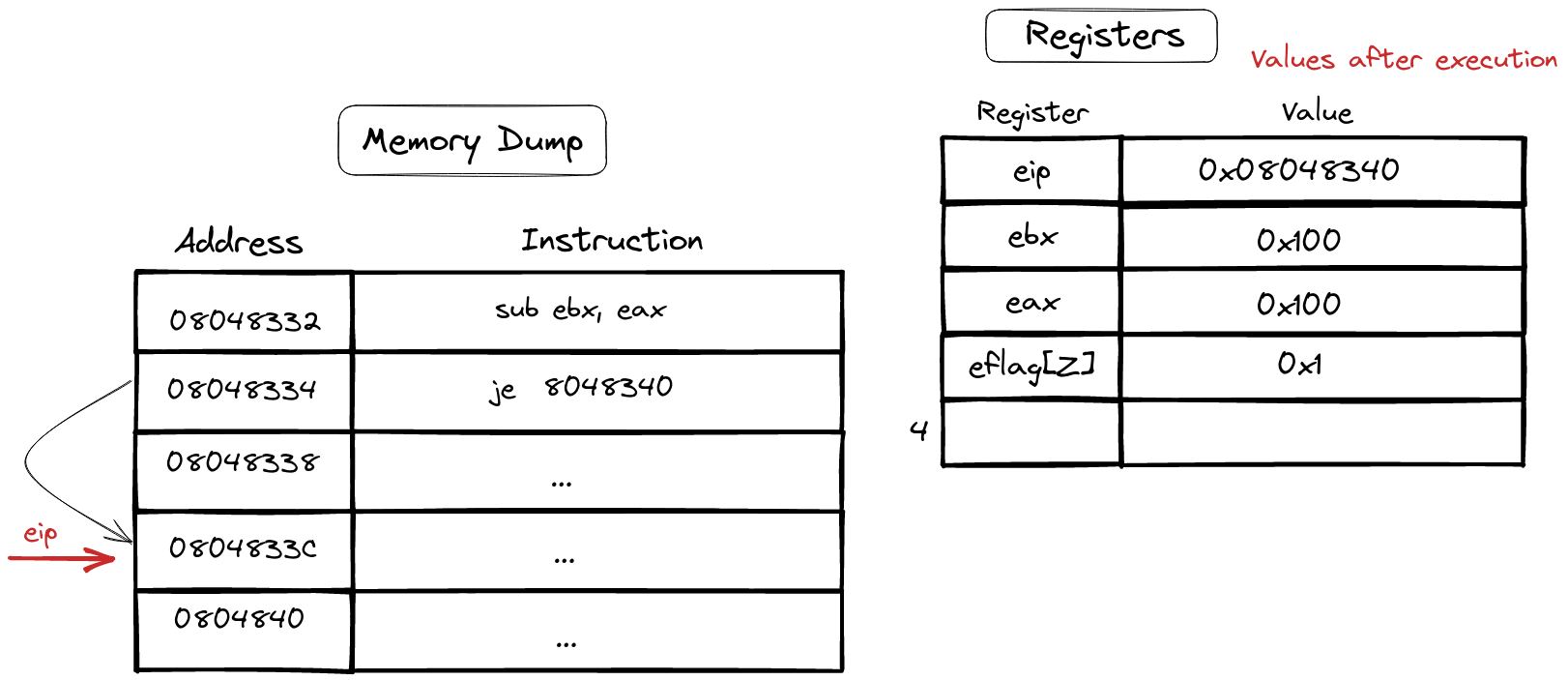

Jump instructions and flags

In the intricate realm of assembly, special instructions take the stage, wielding the power to modify the instruction pointer—the maestro guiding the flow of code execution. Let’s spotlight two primary categories of jump instructions: the unwavering unconditional, like “jmp” and “call,” and the nuanced conditional, such as “je” or “jne.”

In the ballet of conditional jumps, a crucial element steals the spotlight—meet the “flags.” These are housed in the special register, eflags for x32 or rflags for x64. Each bit in this register encapsulates control information, an orchestra of signals that various instructions can interpret.

One star among these flags is the “zero flag” (ZF), a prominent player in the drama of equality. When an operation produces 0, the zero flag is set to 1; otherwise it is cleared to 0. For example, “sub ebx, eax” produces 0 when ebx and eax hold the same value. The “je” (jump if equal) instruction checks this flag. If the flag is 1, je sets the instruction pointer to its target address. If it is 0, execution simply continues with the next instruction. In practice, compilers usually emit “cmp” for such comparisons. It performs the same subtraction but discards the result and only updates the flags.

sub ebx, eax

je 804833C
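The two instructions above can be modelled as a tiny state machine. In this sketch, only eax, ebx, eip and the zero flag are tracked; a real sub updates several other flags as well. The fall-through address `next` (the address right after je) must be supplied by the caller, because its value depends on where je sits in memory. The struct and function names are hypothetical.

```c
#include <stdint.h>

/* Minimal CPU state for this example; only the zero flag is modelled. */
struct cpu {
    uint32_t eax, ebx, eip;
    int zf;                      /* 1 if the last result was zero */
};

/* sub ebx, eax : ebx = ebx - eax, then set ZF from the result. */
void do_sub_ebx_eax(struct cpu *c) {
    c->ebx -= c->eax;
    c->zf = (c->ebx == 0);
}

/* je target : jump to `target` if ZF is set, otherwise fall through to
   `next`, the address of the instruction after je. */
void do_je(struct cpu *c, uint32_t target, uint32_t next) {
    c->eip = c->zf ? target : next;
}
```

With eax = ebx = 5, the subtraction yields 0, ZF becomes 1, and je sends eip to 0x804833C. With ebx = 5 and eax = 1, ZF stays 0, and eip continues at `next`.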

Conclusions

And with that, we draw the curtain on the inaugural chapter of our Binary Exploitation journey! 🎉 In this act, we navigated the intricate landscape of assembly code, exploring the elegant dance of instructions, registers, and the magic of memory.

But fear not, for the stage is set for the next chapter. Join me as we unravel the secrets of function conventions and tiptoe into the captivating realm of reverse engineering. It’s a promise of more revelations and a deeper dive into the fascinating world of binary exploits.

I trust you enjoyed this opening act, and I eagerly await our next rendezvous. Until then, happy coding, and see you in the next installment! 😊🔍

Resources

Guía de auto-estudio para la escritura de exploits (self-study guide to exploit writing) · Guía de exploits
