Katana in Action: Enhancing Security Audits Through Effective Web Crawling

12 min read

March 3, 2024

Introduction

Welcome to a vital chapter in our series on enhancing web application security through advanced crawling techniques. This installment is dedicated to empowering auditors with the knowledge and tools necessary to uncover the hidden depths of web applications. By leveraging the powerful capabilities of Katana and exploring strategic methodologies, readers will gain insights into navigating the complex landscape of software vulnerabilities, TLS/SSL configurations, security headers, and application crawling. This chapter not only outlines the initial steps typically undertaken in security audits but also introduces advanced options for a more thorough examination, promising a significant advantage in the quest for comprehensive security assessments.

Methodology Overview

As we navigate through the various chapters of this series, I’ll highlight a structured series of checks to incorporate into your audits. This approach is designed to streamline your testing process and ensure comprehensive coverage of critical security areas. For today’s installment, I recommend focusing on the following key areas:

- Look at Vulnerabilities in Used Software: It’s essential to start by identifying and assessing the software your application relies on. This includes libraries, frameworks, and any third-party tools. Understanding the vulnerabilities in these components can provide early insights into potential security risks.

- Check TLS/SSL Settings: The configuration of TLS/SSL protocols plays a critical role in securing data in transit. Evaluating these settings ensures that your application is using strong encryption standards and is protected against eavesdropping and man-in-the-middle attacks.

- Configuration of Security Headers: Security headers are a fundamental aspect of web application security. They instruct browsers on how to handle your content safely, preventing a range of attacks. Ensuring these are correctly configured adds another layer of security.

- Application Crawling: Lastly, a thorough crawl of your application is indispensable. It helps map out the application’s structure, revealing the full scope of what needs to be tested. This includes identifying hidden endpoints and resources that could be potential targets for attackers.

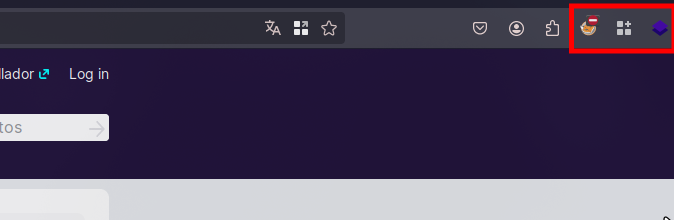

Essential Firefox Setup

To kick things off, we’re going to set up Firefox for performing audits. You’re welcome to use a Chromium-based browser instead, but it’s worth noting that one of the plugins we’ll be discussing isn’t available for those browsers just yet.

First up, and most importantly, is FoxyProxy. This plugin is crucial for configuring the proxies we’ll be utilizing. We’ll dive deeper into its functionality and how it operates in upcoming chapters.

Next on the list is Firefox Containers. This handy plugin makes conducting various authentication and authorization tests a breeze. Essentially, it lets you keep multiple tabs open, each with cookies from different users, facilitating privilege and access control testing. If you’re curious to see it in action, I’ve used it in an article which you might find insightful.

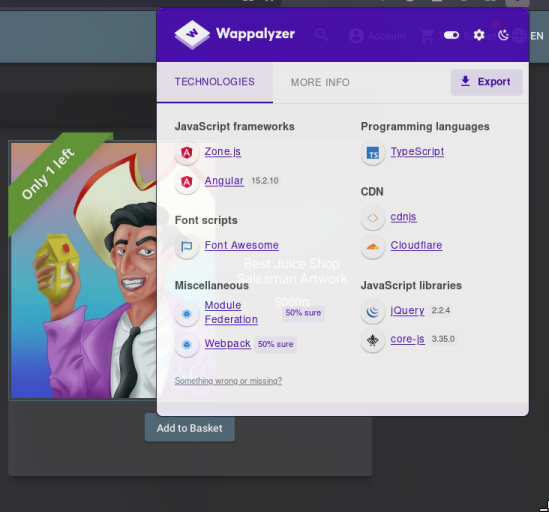

Last but not least, we have Wappalyzer. This tool is invaluable for quickly identifying the software versions a website is running. While it might not be the star of the show during this series, in real-life scenarios, pinpointing software with known vulnerabilities is absolutely critical.

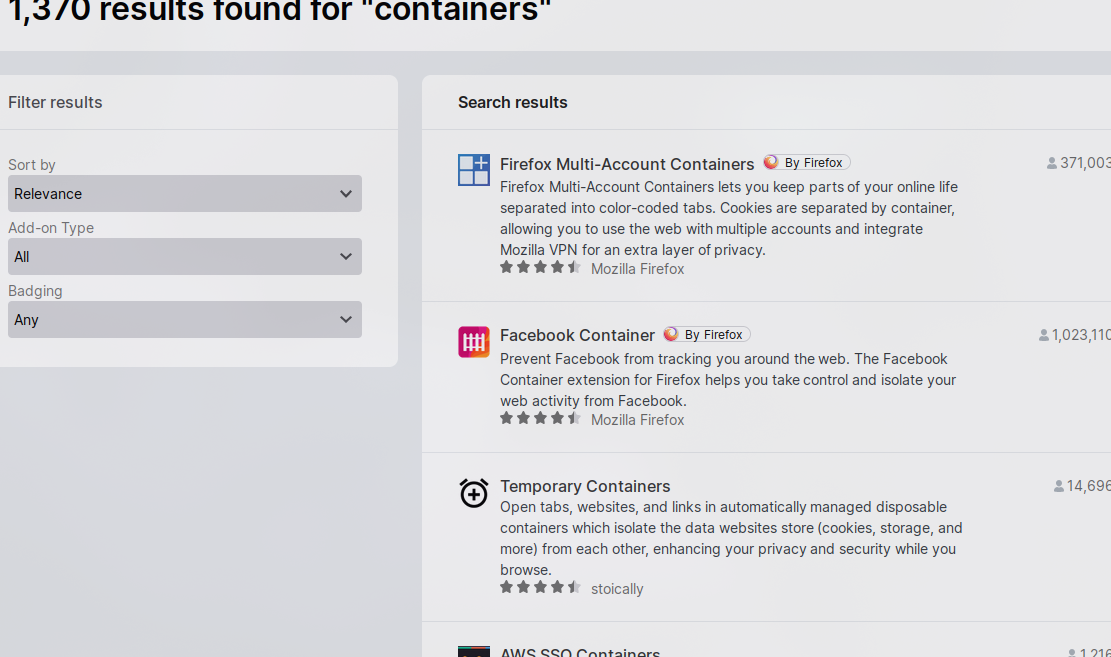

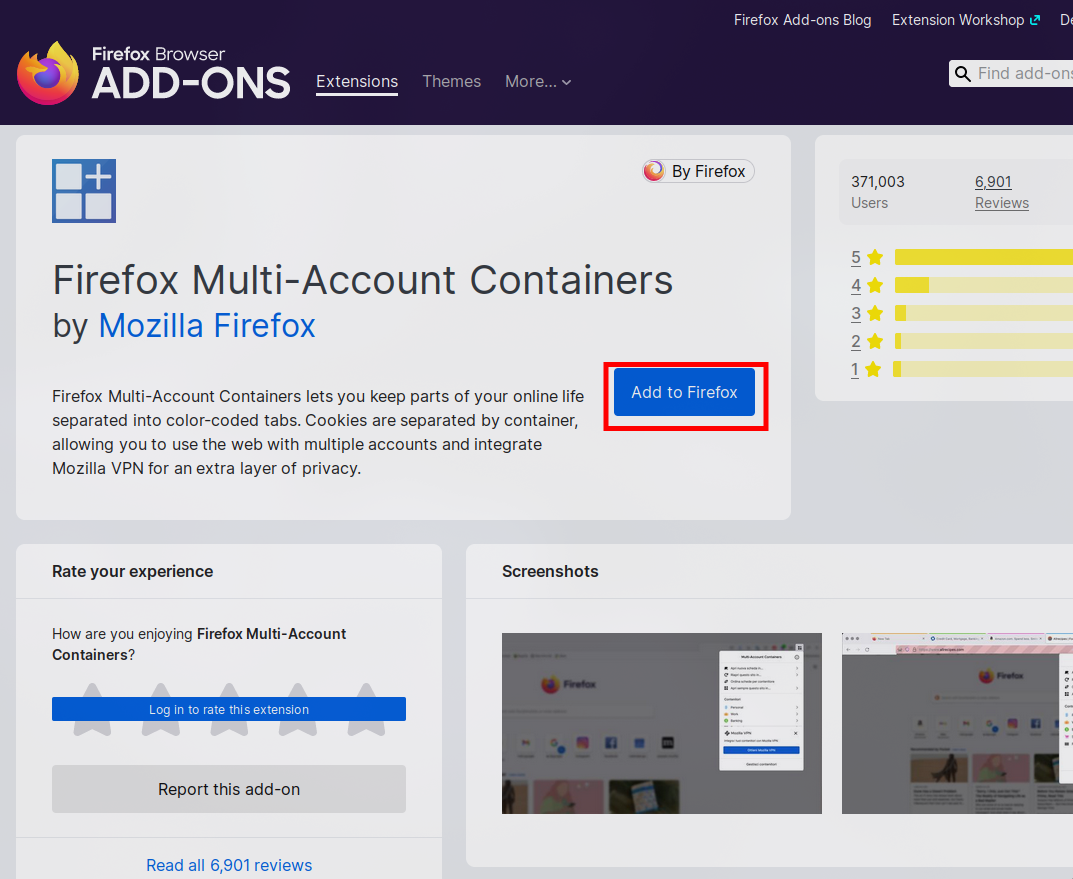

Required plugins

Installing these plugins is a straightforward process, similar to adding any other plugin to Firefox. Just search for them in the Firefox Add-ons Store and click install. It’s as simple as that!

Search in the Firefox store

Add a plugin

Technological Reconnaissance

The first step is to look at the software in use. As we can see, the application relies on Angular, jQuery and core-js. This shapes several of the steps usually performed during an audit, as we will see in this chapter and throughout the series. In a conventional pentest, we would also look up each software version and check whether it has publicly known vulnerabilities.

Technologies detected with Wappalyzer

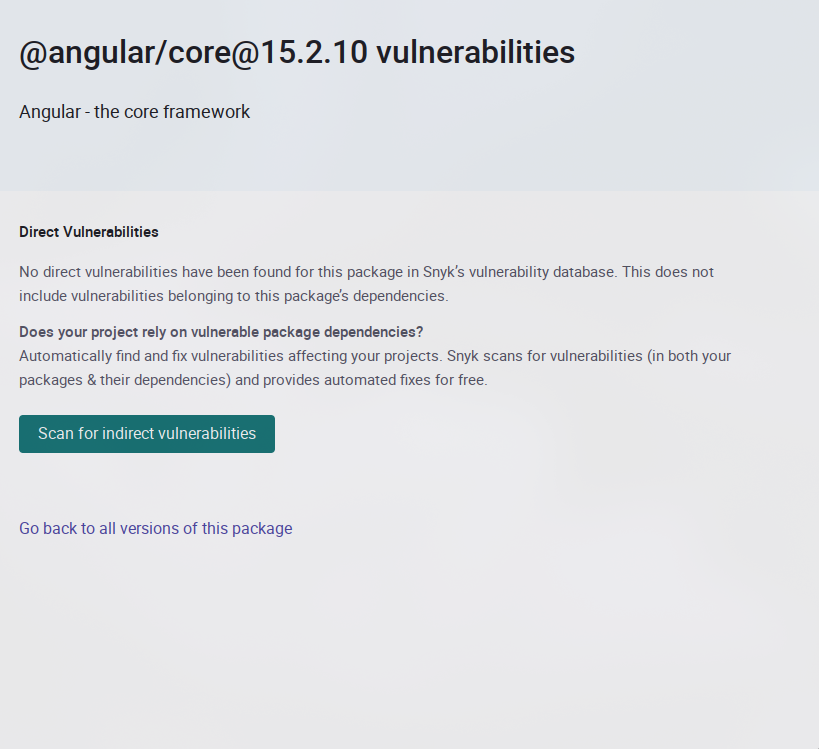

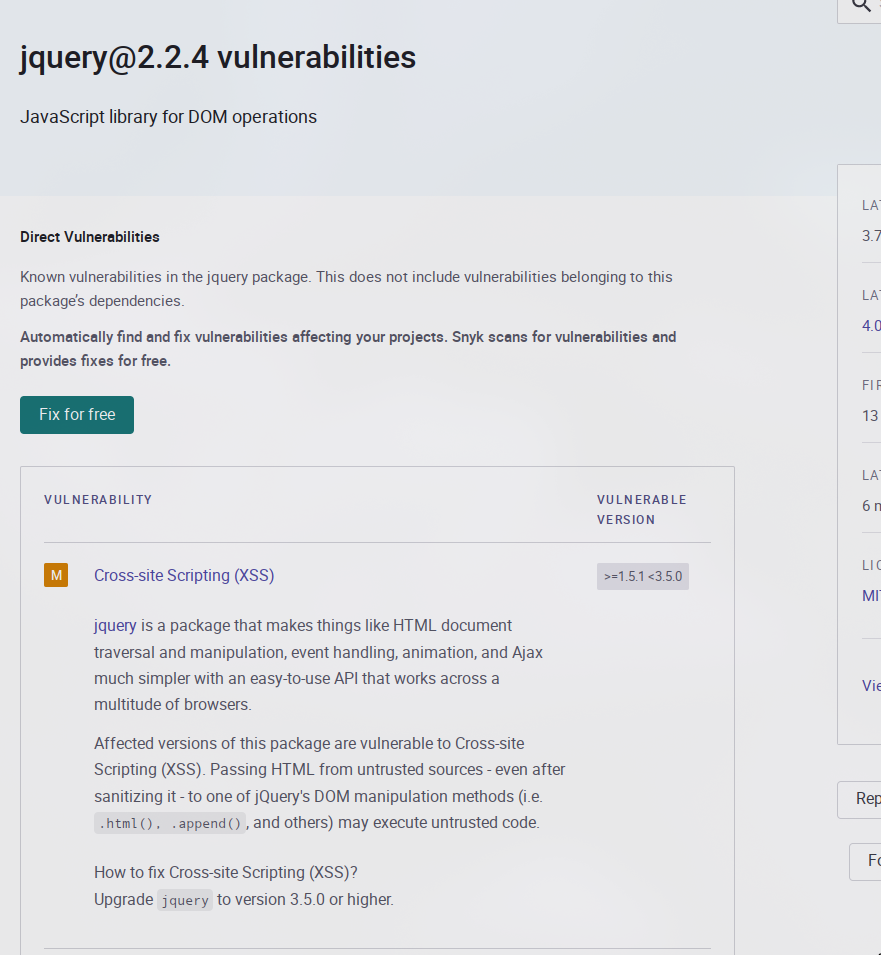

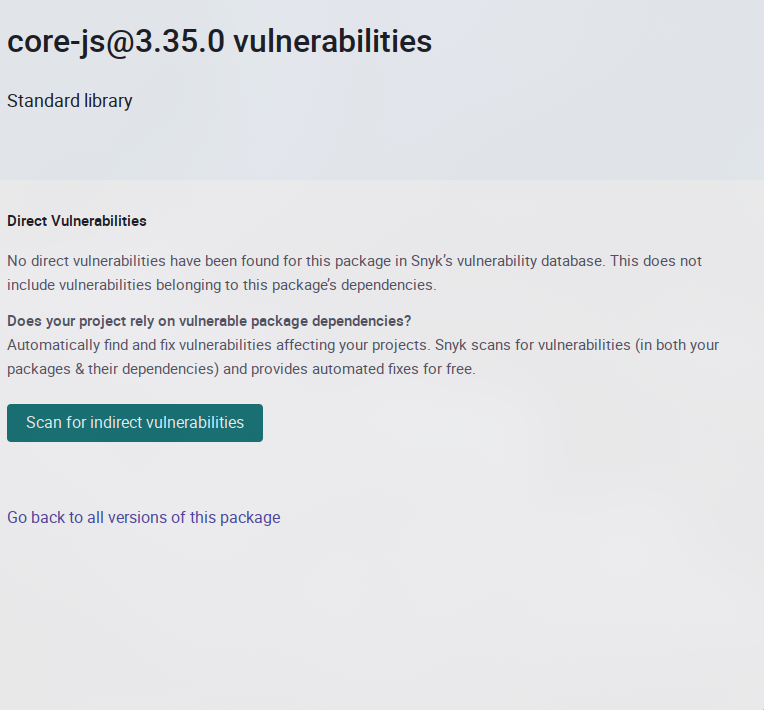

In this case, as we can see, only jQuery contains vulnerabilities. We will have to report this to the client as a finding. Note that we do not need to exploit it: listing it in the report as detected is enough (to find the details, search for the software name and its version).

Angular without vulnerabilities

jQuery with vulnerabilities

Core-js with vulnerabilities

Core Security Checks

In addition to the challenge of keeping software libraries up to date, two critical aspects are commonly scrutinized during web application audits:

- TLS/SSL Certificates: It’s essential to ensure that the security protocols safeguarding data transmission are robust and functioning correctly. For this purpose, we often turn to a tool called testssl. This utility automates the evaluation process, checking the application’s adherence to these security protocols. By using testssl, we aim to provide our clients with peace of mind, confirming that their data encryption standards meet current security benchmarks.

- Security Headers: Another key area of focus is the configuration of security headers within the web application. These headers, such as Strict-Transport-Security, Content-Security-Policy, and others, play a pivotal role in fortifying the application against various vulnerabilities. They work by instructing the browser on how to behave when handling the site’s content, significantly reducing the risk of security breaches. To assess the effectiveness of these security measures, we utilize a tool called shcheck. This tool scans the application’s headers, providing a clear overview of its security posture and highlighting areas for improvement.
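As a rough illustration of the kind of check a header scanner automates, the sketch below reads raw response headers and lists which of a few common security headers are absent. The function name `missing_security_headers` and the header list are illustrative choices for this article, not shcheck’s actual logic or its full set of checks.

```shell
#!/bin/sh
# Hypothetical helper: reads raw HTTP response headers on stdin and prints
# each expected security header that is absent. The header list is an
# illustrative subset, not what any particular tool inspects.
missing_security_headers() {
    headers=$(cat)
    for name in Strict-Transport-Security Content-Security-Policy \
                X-Frame-Options X-Content-Type-Options Referrer-Policy; do
        # Header names are case-insensitive, so match with -i at line start.
        if ! printf '%s\n' "$headers" | grep -qi "^$name:"; then
            printf '%s\n' "$name"
        fi
    done
}

# Example usage (assumes curl is installed; <url> is the audited target):
#   curl -sI "<url>" | missing_security_headers
```

An empty result only means the headers are present; whether their values are sensible still needs a manual look or a dedicated tool.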

The Art of Application Crawling

After completing the initial checks, it’s highly beneficial to perform a web crawl to discover the various types of links present on the webpage. This preliminary exploration is crucial as it lays the groundwork for further automating scanning processes or conducting vulnerability scans. By identifying the links and resources associated with the web application early on, we can streamline our approach to security assessments.

Crawling the web application serves as an initial survey, providing us with valuable insights into its structure and content. This step is crucial for mapping out the application’s landscape, which, in turn, enables us to tailor our security testing strategies more effectively. The insights gained from this process help in automating subsequent scans, ensuring a thorough and efficient assessment.

This initial crawl is just the beginning. It will be complemented by a more detailed examination conducted through our proxy, allowing us to compile a comprehensive list of targets within the application’s scope. Together, these methods ensure that no stone is left unturned in our quest to secure the web application.

There are numerous tools available for carrying out this process, each with its own set of features and capabilities. While you can explore a variety of these tools through the links in Additional Resources, I personally prefer using Katana from ProjectDiscovery. Katana stands out due to its efficiency and the depth of analysis it offers, making it an invaluable asset in our security toolkit. In this article, we’ll dive deeper into how Katana facilitates our web crawling objectives, highlighting its features and demonstrating its application in real-world scenarios.

Leveraging Katana

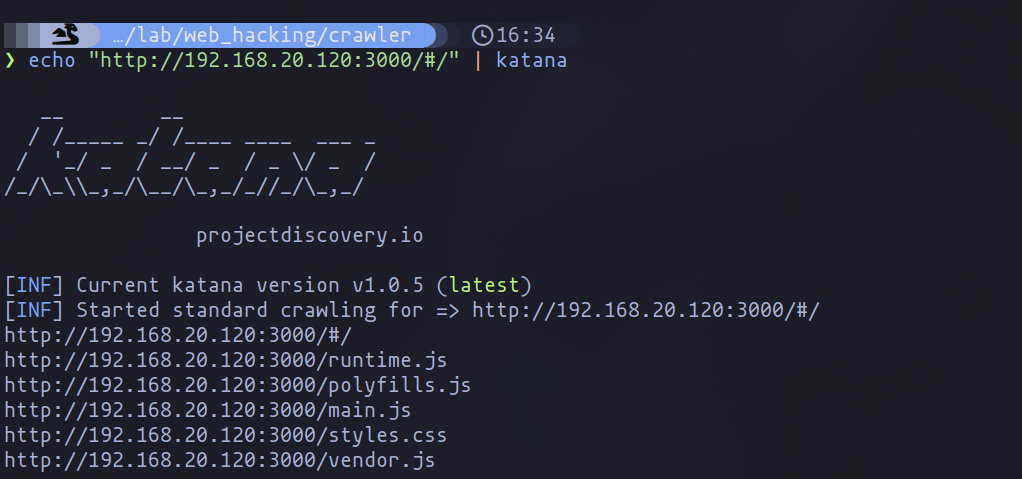

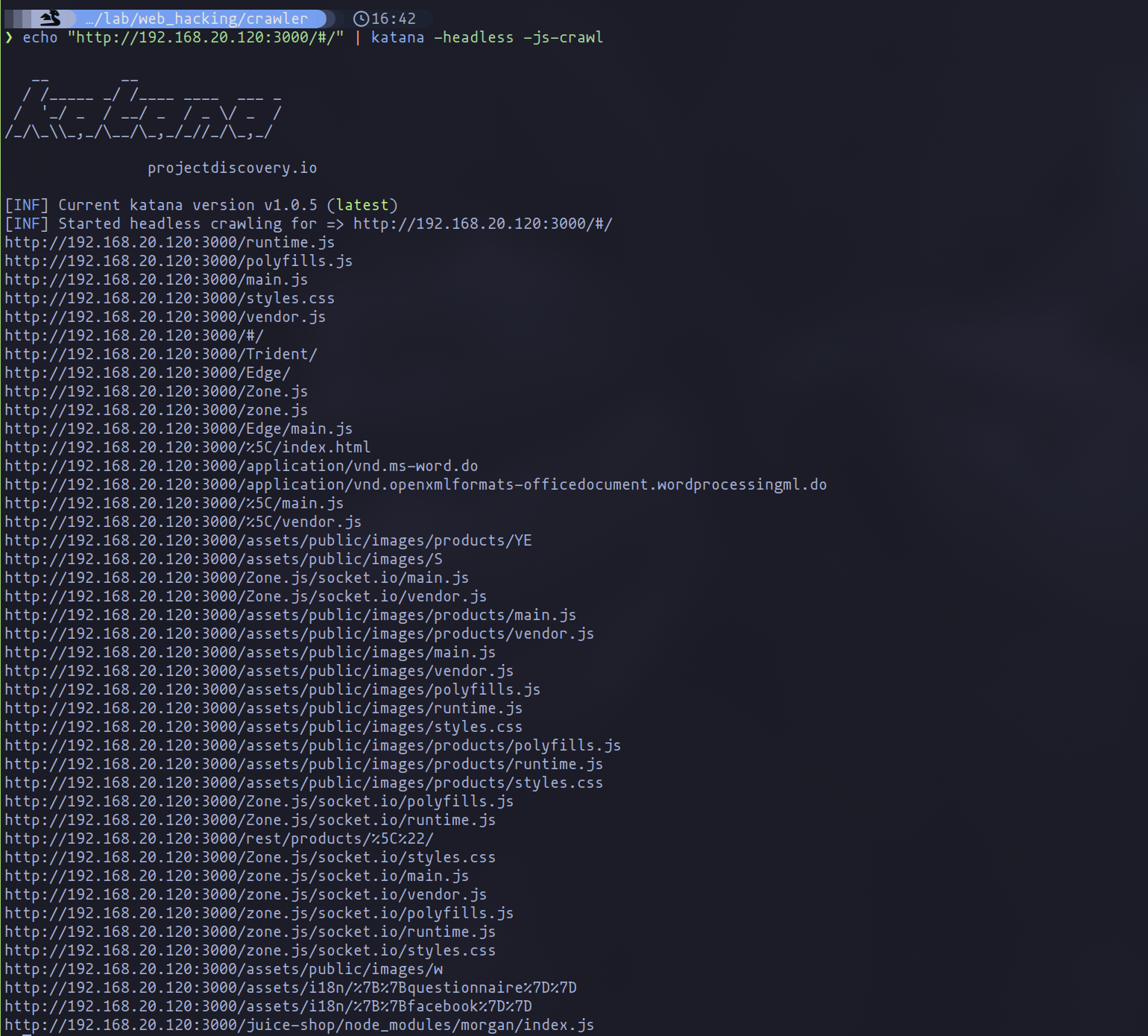

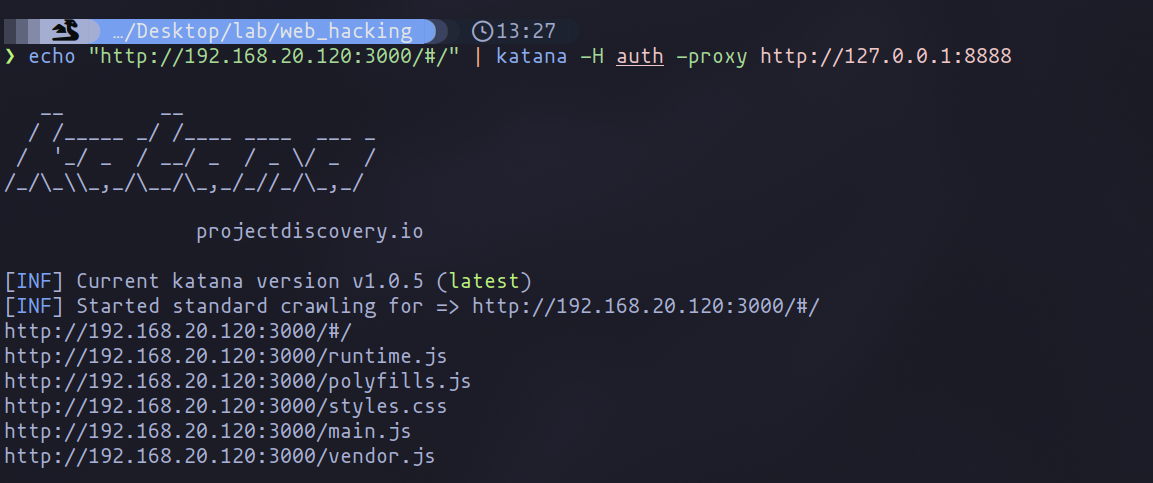

With the groundwork laid and the importance of a detailed web crawl established, engaging Katana becomes our strategic move to unearth even more about our target’s digital terrain. Let’s initiate this journey with a simple command:

echo <url> | katana

Normal katana execution

Utilizing Katana with its default settings offers a glimpse into the application’s link structure, yet our ambition drives us to seek a comprehensive view. To encapsulate the breadth of potential vulnerabilities, we enhance our toolkit with specific flags that amplify our discovery process.

Enhancing Discovery with Headless Mode

In the context of modern web applications, particularly Single Page Applications (SPA) that dynamically load content, the -headless flag becomes an indispensable tool in our arsenal. By activating this flag, we leverage the robust capabilities of the Chromium engine. This strategic move is crucial for applications like the one we’re testing, where content is dynamically generated and traditional crawling methods fall short.

echo <url> | katana -headless

We use the parameter headless

Utilizing Katana in headless mode allows us to simulate a real user’s interaction with the application, bypassing the limitations that prevent standard crawlers from accessing dynamically loaded content. This command adjustment is transformative, unveiling a wealth of links that would otherwise remain concealed.
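To see how much headless mode actually adds on a given target, one option is to save both crawls and list the links that appear only in the headless run. The helper name `new_links` and the file names are placeholders introduced for this sketch.

```shell
#!/bin/sh
# Hypothetical helper: prints URLs present in the second file but not in the
# first. $1 = links from the standard crawl, $2 = links from the headless crawl.
new_links() {
    # -F fixed strings, -x whole-line match, -v invert, -f read patterns from file
    grep -Fxv -f "$1" "$2" | sort -u
}

# Example usage (file names are placeholders):
#   echo <url> | katana -o standard.txt
#   echo <url> | katana -headless -o headless.txt
#   new_links standard.txt headless.txt
```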

Maximizing Results with JavaScript Crawling

Our quest for exhaustiveness leads us to the -js-crawl flag, enabling Katana to scrutinize JavaScript files for hidden links:

echo <url> | katana -headless -js-crawl

Maximize encounters by looking in JavaScript files

This configuration is my standard for ensuring no potential link is overlooked, setting the stage for a detailed vulnerability assessment. After collecting a comprehensive set of data, I utilize grep to sift through the findings, focusing specifically on the links that align with our security assessment goals. This process of manual refinement is essential for isolating relevant vulnerabilities from the broader dataset, ensuring our efforts are as targeted and effective as possible. While Katana’s capabilities extend beyond these commands, including options for targeted filtering and output customization, I encourage a dive into its official documentation to discover how best to tailor its use to your needs.
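The grep step can take many forms. One minimal sketch, assuming the crawl output was saved with one URL per line, keeps only links on the host in scope and drops common static assets. The function name `in_scope_links` and the extension list are assumptions made for illustration, not a fixed recipe.

```shell
#!/bin/sh
# Hypothetical helper: keeps URLs on one host and drops common static assets.
# $1 = host in scope (for example localhost:3000), $2 = file with one URL per line.
# Note: dots in the host are treated as regex wildcards, which is good enough
# for a quick manual filter.
in_scope_links() {
    grep -E "^https?://$1(/|\$)" "$2" \
        | grep -Eiv '\.(png|jpe?g|gif|svg|ico|css|woff2?)(\?|$)' \
        | sort -u
}

# Example usage (links.txt is a placeholder for the saved crawl output):
#   in_scope_links 'localhost:3000' links.txt
```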

Diving Deeper: Advanced Options

In addition to the foundational strategies we’ve explored, there are several intriguing options worth considering to further refine our web crawling efforts. Among these, two advanced flags stand out for their potential to significantly deepen our exploration:

- The -d flag adjusts the crawl’s depth, letting us balance thorough exploration against time spent. This adaptability is invaluable, allowing us to customize the depth of our crawl to meet the unique requirements of each security assessment.

- The -aff flag, an experimental feature, enables automatic form filling, opening the door to discovering links that might remain hidden under normal circumstances. This approach can unveil functionality reachable only after a form is submitted, providing a richer, more detailed perspective on the application’s security landscape.
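Combined with the flags from the previous sections, the two options could be wrapped as follows. The wrapper name `deep_crawl` and its fallback depth of 3 are choices made for this sketch; pick a depth that suits the size of the target.

```shell
#!/bin/sh
# Hypothetical wrapper: $1 = target URL, $2 = crawl depth (falls back to 3 here).
deep_crawl() {
    katana -u "$1" -headless -jc -d "${2:-3}" -aff
}

# Example usage:
#   deep_crawl "<url>" 5
```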

Authenticated Crawling

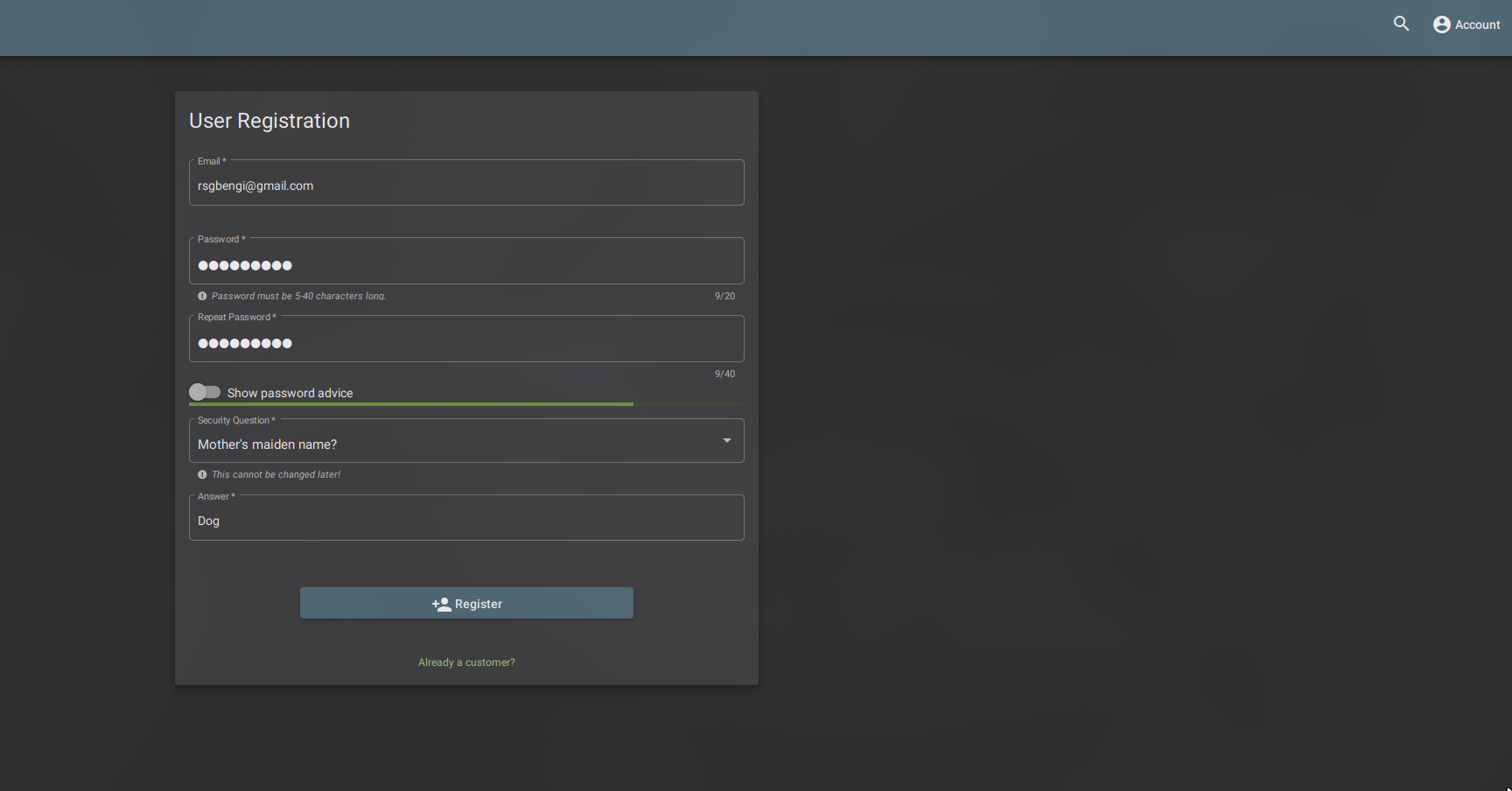

In the comprehensive process of web application security auditing, an essential technique involves enhancing our crawling capabilities by integrating authentication cookies. This step is crucial as it unveils links and resources accessible only after authentication, offering deeper insights into the application’s security landscape. The journey begins with registering on the application, which, in the case of JuiceShop, involves obtaining a JWT (JSON Web Token). This token is vital for authenticating against the application’s API, and I have delved into its specifics in a dedicated chapter of the Hacking APIs series.

User registration

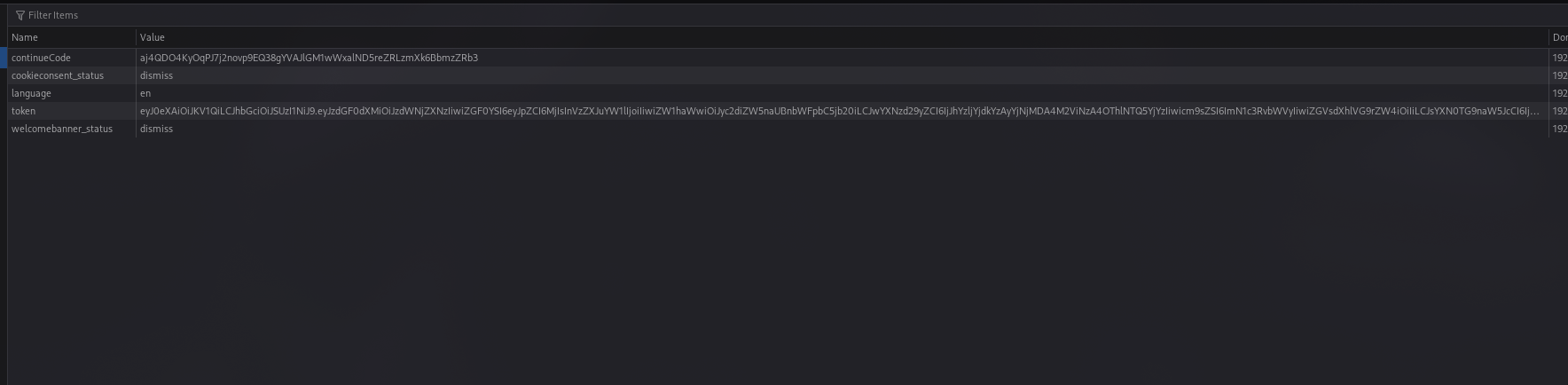

Retrieving this JWT can be achieved in a couple of ways. You could use the browser’s developer tools, accessed with the F12 key, to inspect the cookies directly. Alternatively, proxy tools such as mitmproxy, Burp Suite, or OWASP ZAP can be employed to capture the necessary requests and thus obtain the tokens and cookies used in authenticated sessions.

Cookies in Firefox

Cookies in mitmproxy

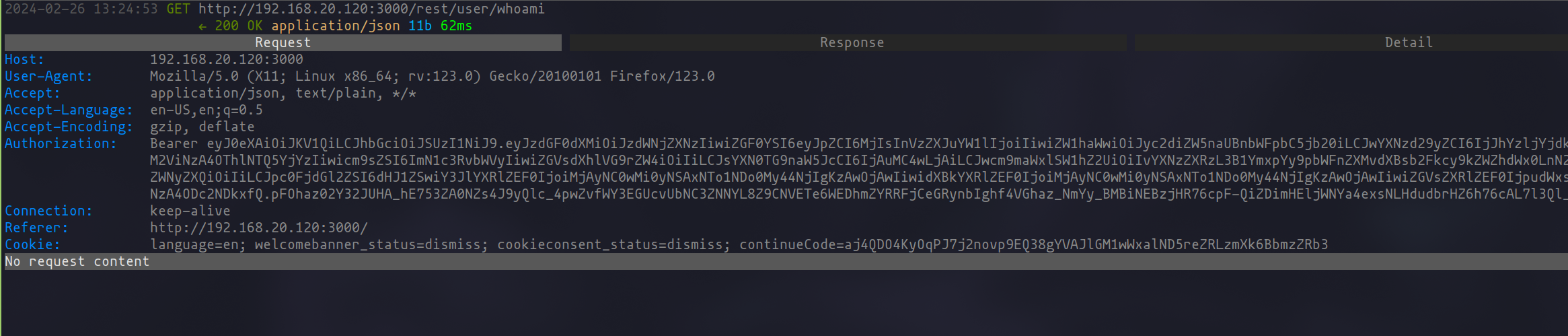

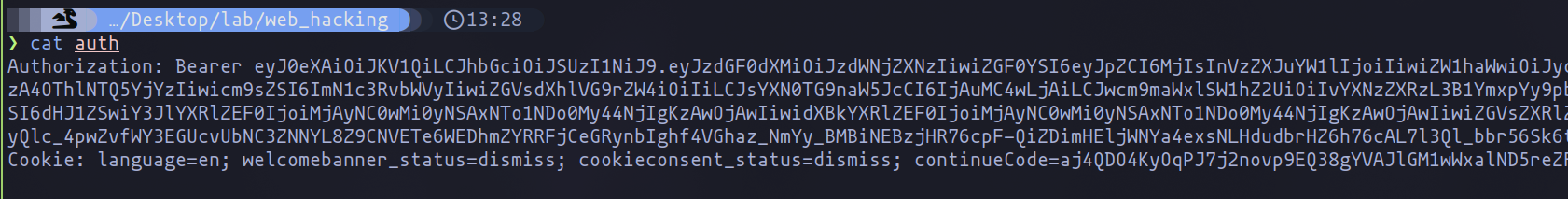

Once the JWT is in hand, the next course of action is to export this token, along with any relevant cookies, into a file. This file then serves as a bridge to the next step of our process. By feeding this information into Katana’s crawling mechanism with the -H argument, we ensure the inclusion of the authentication header in our crawl. To guarantee that the integration works as intended, I recommend using the -proxy argument for debugging purposes. This allows for real-time monitoring of the requests, affirming that the authentication details are correctly applied to the tool’s operations.

Cookies on file

The image below shows the authentication cookies being included in the requests Katana sends.

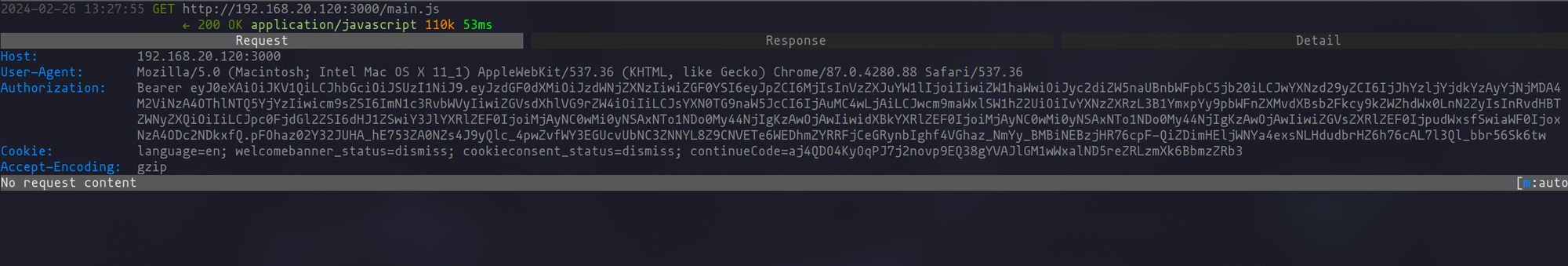

Requests with authentication using Katana

Though the procedure may appear direct and uncomplicated, the critical practice of debugging requests to ensure that session cookies are effectively passed to Katana’s requests cannot be overstated. This step is essential in web application security auditing, as it verifies the authenticity and effectiveness of our crawling efforts.

Confirmation that the request is made successfully
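The steps above can be sketched as two small helpers: one writes the headers file, the other runs the crawl through a proxy for inspection. The function names, the `Authorization: Bearer` and `Cookie: token=` header formats, and the proxy address are all assumptions; use whatever the captured requests of your target actually show.

```shell
#!/bin/sh
# Hypothetical helper: writes one "Header: value" line per header to a file.
# $1 = JWT, $2 = output file. Both header formats are assumptions about how
# the target expects the token; adjust them to match the proxy capture.
write_auth_headers() {
    {
        printf 'Authorization: Bearer %s\n' "$1"
        printf 'Cookie: token=%s\n' "$1"
    } > "$2"
}

# Hypothetical wrapper: $1 = target URL, $2 = headers file, $3 = proxy URL.
authenticated_crawl() {
    katana -u "$1" -H "$2" -proxy "$3"
}

# Example usage (the proxy address is a placeholder for a local listener):
#   write_auth_headers "<jwt>" headers.txt
#   authenticated_crawl "<url>" headers.txt http://127.0.0.1:8080
```

Once the proxy confirms the headers arrive as expected, the -proxy argument can be dropped for the full crawl.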

The Power Of Parameters

In our discussions on optimizing the use of katana for web application security assessments, I’ve emphasized my general approach of not applying filters directly within katana. However, there exists a particularly useful parameter that, under certain circumstances, merits consideration. This parameter shines when automating the detection of vulnerabilities, and it’s one we’ll be leveraging in our future explorations.

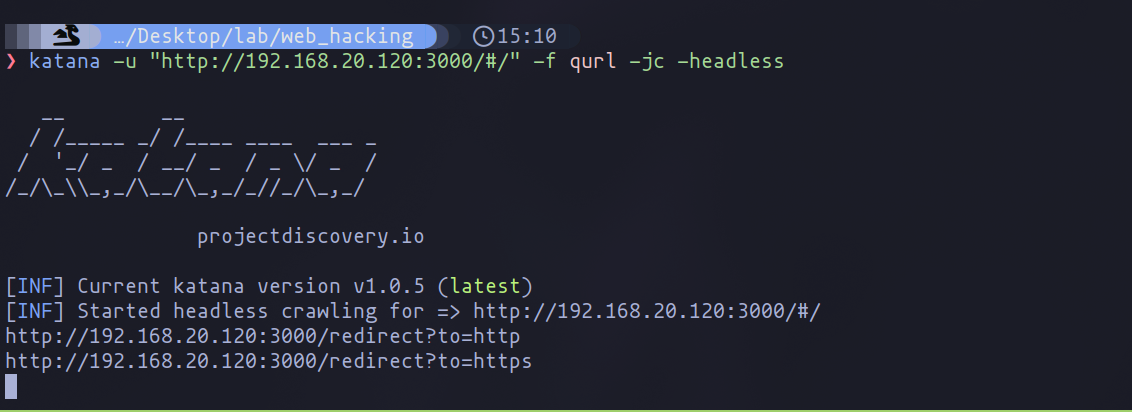

The parameter in question is -f qurl. Its primary function is to home in on URLs that carry a query string in GET requests, that is, those that use the "?" character to introduce parameters. This focus is invaluable because it allows us to narrow down our examination to the points within an application where user input is directly processed in the URL, often a hotspot for potential vulnerabilities.

To apply this parameter alongside others for a comprehensive and targeted analysis, the command structure would look something like this:

katana -u "<url>" -f qurl -jc -headless

Links found with parameters
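The same endpoint often shows up many times with different values, so before feeding these links into automated checks it can help to collapse them to one entry per endpoint and parameter set. The following is a minimal sketch of that idea; the function name `unique_param_endpoints` is hypothetical and the approach is simply one way to deduplicate.

```shell
#!/bin/sh
# Hypothetical helper: reads URLs on stdin, strips parameter values, and prints
# one line per unique combination of endpoint and parameter names.
unique_param_endpoints() {
    grep -F '?' | sed -E 's/=[^&]*//g' | sort -u
}

# Example usage:
#   katana -u "<url>" -f qurl -jc -headless | unique_param_endpoints
```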

Conclusion

As we close this chapter, we reflect on the comprehensive journey through the landscape of web application security. The sophisticated art of web crawling, enriched by strategic methodologies and the adept use of Katana, has prepared us to face the complexities of modern web applications. This exploration goes beyond mere detection, enabling us to understand and mitigate the myriad vulnerabilities that challenge the security of the digital world. It’s a testament to the evolving nature of security auditing, urging us to constantly seek out new tools and techniques to safeguard our digital frontiers.

Additional Resources

GitHub - projectdiscovery/katana: A next-generation crawling and spidering framework.

GitHub - vavkamil/awesome-bugbounty-tools: A curated list of various bug bounty tools
